The confluence of edge computing and artificial intelligence (AI) gives birth to edge intelligence. Edge computing coordinates a multitude of collaborating edge devices and servers to process data close to where it is generated, and AI is needed to fully unlock the potential of that data.

Motivation and Benefits of Edge Intelligence:

1. Data generated at the network edge need AI to fully unlock their potential: As the number and variety of mobile and IoT devices grow, large volumes of multi-modal data (e.g., audio, pictures and video) about the physical surroundings are continuously sensed on the device side. AI becomes functionally necessary here because it can quickly analyze these data volumes and extract insights for high-quality decision making. As one of the most popular AI techniques, deep learning can automatically identify patterns and detect anomalies in the data sensed by edge devices, such as population distribution, traffic flow, humidity, temperature, pressure and air quality. The insights extracted from the sensed data then feed real-time predictive decision making in response to fast-changing environments, increasing operational efficiency.

2. Edge computing can help AI flourish with richer data and application scenarios: Edge computing is designed to achieve low-latency data processing by moving computing capability from the cloud data center to the edge, i.e., close to the data source, which may enable high-performance AI processing.

3. AI democratization requires edge computing as key infrastructure: First, compared to the cloud data center, edge servers are in closer proximity to people, data sources and devices. Second, compared to cloud computing, edge computing is more affordable and accessible. Finally, edge computing has the potential to provide more diverse AI application scenarios than cloud computing.

4. Edge computing can be popularized with AI applications: As an emerging application built on top of computer vision, real-time video analytics continuously pulls high-definition video from surveillance cameras and requires high computation, high bandwidth, strong privacy and low latency to analyze it. Edge computing is a viable approach that can meet these strict requirements.

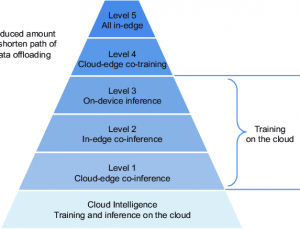

Levels of Edge Intelligence:

It’s important to note that Edge Intelligence isn’t restricted to processing at the edge. Depending on the workload, it may be better to process in the cloud or on-device in addition to the edge.

1. Cloud Intelligence: Training and inferencing the DNN model fully in the cloud.

2. Level-1 Cloud-Edge Co-Inference and Cloud Training: Training the DNN model in the cloud, but inferencing the DNN model in an edge-cloud cooperation manner. Here edge-cloud cooperation means that data is partially offloaded to the cloud.

3. Level-2 In-Edge Co-Inference and Cloud Training: Training the DNN model in the cloud, but inferencing the DNN model in an in-edge manner. Here in-edge means that the model inference is carried out within the network edge, which can be realized by fully or partially offloading the data to the edge nodes or nearby devices (via D2D communication).

4. Level-3 On-Device Inference and Cloud Training: Training the DNN model in the cloud, but inferencing the DNN model in a fully local on-device manner. Here on-device means that no data would be offloaded.

5. Level-4 Cloud-Edge Co-Training & Inference: Training and inferencing the DNN model both in the edge-cloud cooperation manner.

6. Level-5 All In-Edge: Training and inferencing the DNN model both in an in-edge manner.

7. Level-6 All On-Device: Training and inferencing the DNN model both in an on-device manner.

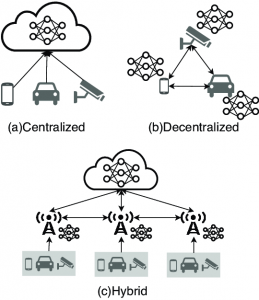
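The levels above differ only in where training and where inference take place, so they can be written down as a small lookup table. The following is a minimal sketch for illustration; the names `Location`, `Level`, `LEVELS` and `find_level` are hypothetical and not part of any library or of the original taxonomy.

```python
from dataclasses import dataclass
from enum import Enum
from typing import Optional


class Location(Enum):
    """Where a phase (training or inference) runs. Names are illustrative."""
    CLOUD = "cloud"
    CLOUD_EDGE = "edge-cloud cooperation"
    IN_EDGE = "in-edge"
    ON_DEVICE = "on-device"


@dataclass(frozen=True)
class Level:
    name: str
    training: Location
    inference: Location


# One entry per level in the list above, in the same order.
LEVELS = [
    Level("Cloud Intelligence", Location.CLOUD, Location.CLOUD),
    Level("Level-1", Location.CLOUD, Location.CLOUD_EDGE),
    Level("Level-2", Location.CLOUD, Location.IN_EDGE),
    Level("Level-3", Location.CLOUD, Location.ON_DEVICE),
    Level("Level-4", Location.CLOUD_EDGE, Location.CLOUD_EDGE),
    Level("Level-5", Location.IN_EDGE, Location.IN_EDGE),
    Level("Level-6", Location.ON_DEVICE, Location.ON_DEVICE),
]


def find_level(training: Location, inference: Location) -> Optional[Level]:
    """Return the level matching a training/inference placement, if any."""
    for level in LEVELS:
        if level.training is training and level.inference is inference:
            return level
    return None


if __name__ == "__main__":
    match = find_level(Location.CLOUD, Location.ON_DEVICE)
    print(match.name if match else "no matching level")
```

Laid out this way, the pattern is easy to see: Levels 1 to 3 keep training in the cloud and move only inference toward the device, while Levels 4 to 6 move training along the same path.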

Edge Intelligence Model Training:

There are three primary architectures pertaining to training models for Edge Intelligence:

1. Centralized: Training on the cloud

2. Decentralized: Training local models on-device

3. Hybrid: Training on edge servers and the cloud
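To make the decentralized case more concrete, here is a minimal sketch of devices training local models on their own data. It assumes, beyond what the list above states, that the local models are then combined by a sample-weighted average, which is one common way to merge them. The toy one-dimensional linear model and the names `local_update` and `aggregate` are hypothetical and chosen only for illustration.

```python
from typing import List, Sequence, Tuple

Weights = Tuple[float, float]  # (w, b) of a toy model y = w * x + b


def local_update(weights: Weights,
                 samples: Sequence[Tuple[float, float]],
                 lr: float = 0.1) -> Weights:
    """One pass of SGD on a device's own (x, y) samples; raw data stays local."""
    w, b = weights
    for x, y in samples:
        err = (w * x + b) - y  # gradient factor of the loss 0.5 * err**2
        w -= lr * err * x
        b -= lr * err
    return (w, b)


def aggregate(local_weights: List[Weights],
              sample_counts: List[int]) -> Weights:
    """Assumed merge step: average local models, weighted by sample count."""
    total = sum(sample_counts)
    w = sum(lw[0] * n for lw, n in zip(local_weights, sample_counts)) / total
    b = sum(lw[1] * n for lw, n in zip(local_weights, sample_counts)) / total
    return (w, b)


if __name__ == "__main__":
    # Two hypothetical devices, each holding its own data for y = 2x + 1.
    device_data = [
        [(0.0, 1.0), (1.0, 3.0)],
        [(2.0, 5.0), (3.0, 7.0), (4.0, 9.0)],
    ]
    model: Weights = (0.0, 0.0)
    for _ in range(200):
        updates = [local_update(model, data, lr=0.02) for data in device_data]
        model = aggregate(updates, [len(d) for d in device_data])
    print(model)
```

In a centralized setup the same `local_update` loop would instead run once in the cloud over the pooled data. A hybrid setup could, under the same assumptions, run the merge step on edge servers before anything is sent to the cloud.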

Future Contributions in Edge Intelligence:

1. Programming platforms that support heterogeneous hardware and can be compared through well-defined metrics will help in designing more efficient Edge Intelligence systems.

2. AI models that are resource-aware or resource-friendly will greatly benefit Edge Intelligence by running effectively on low-resource or constrained systems.

3. Computation-aware networking techniques will be useful in such complex and heterogeneous systems. 5G is taking these steps forward with its ultra-reliable low-latency communication technology among other enhancements.

4. Defining clear design tradeoffs for models used in Edge Intelligence will allow developers to implement the correct model to suit a specific need.

5. Managing resources and services for users in need of Edge Intelligence will help make the applications more desirable and practical.

6. Maintaining user privacy and security is of utmost importance so that users of Edge Intelligence technologies continue to trust them.