When Robert Whitehead invented the self-propelled torpedo in the 1860s, the early guidance system for maintaining depth was so new and essential he called it “The Secret.” Airplanes got autopilots just a decade after the Wright brothers. These days, your breakfast cereal was probably gathered by a driverless harvester. Sailboats have autotillers. Semi-autonomous military drones kill from the air, and robot vacuum cleaners confuse our pets.

In the past five years, autonomous driving has gone from “maybe possible” to “definitely possible” to “inevitable” to “how did anyone ever think this wasn’t inevitable?” Every significant automaker is pursuing the tech, eager to rebrand and rebuild itself as a “mobility provider” before the idea of car ownership goes kaput. Waymo, the company that emerged from Google’s self-driving car project, has been at it the longest, but its monopoly has eroded of late. Ride-hailing companies like Lyft and Uber are hustling to dismiss the profit-gobbling human drivers who now shuttle their users about. Tech giants like Intel, IBM, and Apple are looking to carve off their slice of the pie as well. Countless hungry startups have materialized to fill niches in a burgeoning ecosystem, focusing on laser sensors, compressing mapping data, and setting up service centers to maintain the vehicles.

The autonomous-vehicle industry took off after a team from Stanford University won the DARPA Grand Challenge in 2005. The team included pioneers such as Sebastian Thrun, Mike Montemerlo, Hendrik Dahlkamp, and David Stavens. Shortly afterward, Google hired Thrun to work on its own self-driving cars, which ignited the push to get self-driving fleets on the road.

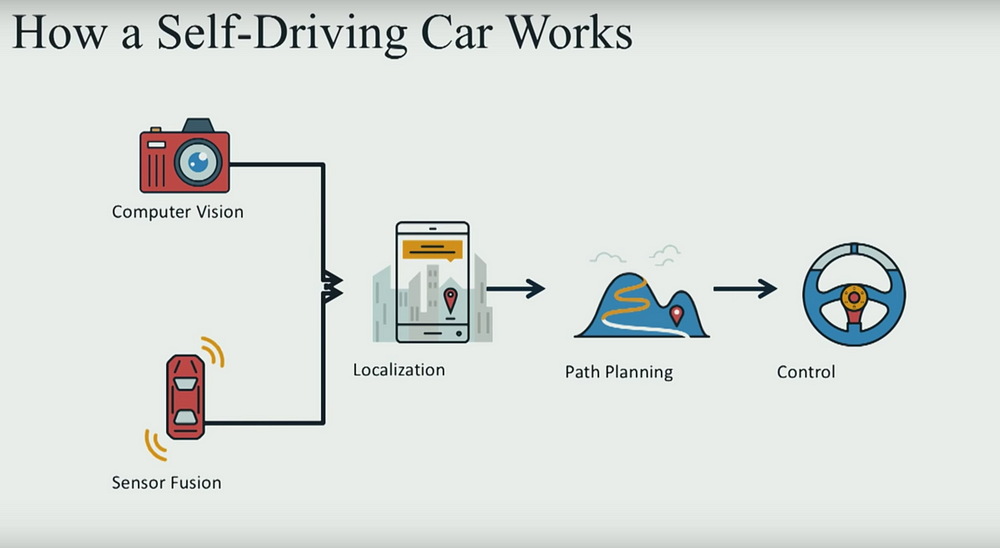

So, how exactly does an autonomous vehicle work?

There are 5 core components:

- COMPUTER VISION :

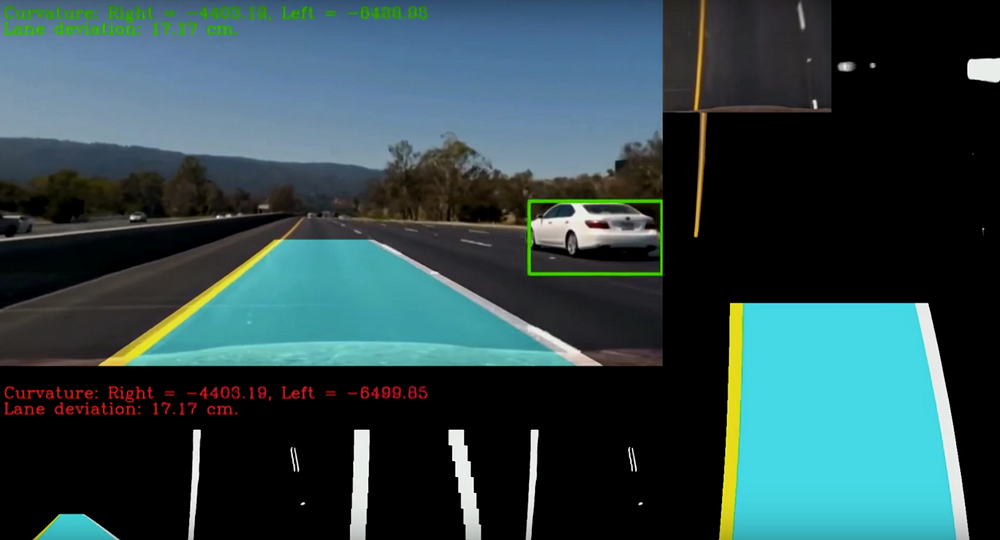

Computer Vision tells the car what the world around it looks like. Typically, a camera mounted on top of the car captures images in real time, and a deep neural network processes them to find objects close to the car. Typical use cases are object detection and lane segmentation.

- SENSOR FUSION :

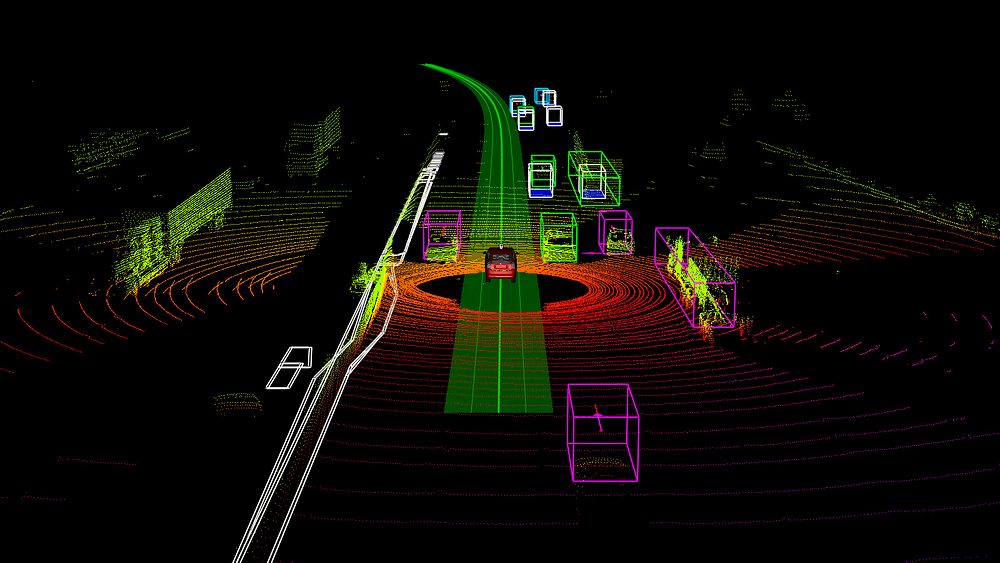

Sensor Fusion typically combines readings from sensors such as radar and lidar (laser ranging), which emit signals and measure their reflections, to build a richer understanding of the things around the vehicle. Once we’ve understood the environment around us, we move on to localization.

- LOCALIZATION :

Localization helps the car understand exactly where it is in the world. The simplest source of this information is the car’s GPS coordinates.
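
To make the GPS idea concrete, here is a minimal sketch of how a raw GPS fix could be turned into a position in metres on a local map. It is only an illustration, not how any particular car does it: the function name `gps_to_local_xy`, the reference point, and the spherical-Earth (equirectangular) approximation are all assumptions made for this example.

```python
import math

# Assumption: treat the Earth as a sphere with its mean radius.
EARTH_RADIUS_M = 6_371_000.0


def gps_to_local_xy(lat_deg, lon_deg, ref_lat_deg, ref_lon_deg):
    """Return (east, north) offsets in metres from a reference GPS point.

    Uses the equirectangular approximation, which is only reasonable over
    short distances (a few kilometres) and away from the poles.
    """
    lat, lon = math.radians(lat_deg), math.radians(lon_deg)
    ref_lat, ref_lon = math.radians(ref_lat_deg), math.radians(ref_lon_deg)

    # East-west distance shrinks with latitude, hence the cosine factor.
    east = EARTH_RADIUS_M * (lon - ref_lon) * math.cos((lat + ref_lat) / 2.0)
    north = EARTH_RADIUS_M * (lat - ref_lat)
    return east, north


if __name__ == "__main__":
    # Hypothetical fix slightly north of a hypothetical map origin.
    east, north = gps_to_local_xy(37.001, -122.0, 37.0, -122.0)
    print(f"east={east:.1f} m, north={north:.1f} m")
```

Consumer GPS is typically only accurate to within a few metres, so a position like this is usually a starting estimate that is refined with the other sensors.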

- PATH-PLANNING : Once the basic groundwork has been done, we come to the most important part: path planning. Path planning decides how we intend the self-driving car to move and in which direction. An example would be using the earlier lane segmentation to keep the car in its lane.

- CONTROL : This is the final step, in which the car works out the steering angle it needs and when to hit the throttle or the brakes in order to execute the trajectory it wishes to follow.
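
The last two steps can be sketched in a few lines. The example below is a simplified illustration under stated assumptions, not a production method: path planning is reduced to taking the midpoint of the detected lane boundaries, and control is reduced to a pure-pursuit steering rule on a bicycle model. The function names, the 2.7 m wheelbase, and the 5 m lookahead distance are all hypothetical choices for this sketch, and throttle and brake control are left out.

```python
import math


def lane_centerline(left_boundary, right_boundary):
    """Path planning (simplified): midpoints of paired left/right lane points.

    Points are (x, y) in the vehicle frame: x forward, y to the left, in metres.
    """
    return [((lx + rx) / 2.0, (ly + ry) / 2.0)
            for (lx, ly), (rx, ry) in zip(left_boundary, right_boundary)]


def pick_target(path, lookahead_m=5.0):
    """Return the first path point at least lookahead_m away, else the last point."""
    for point in path:
        if math.hypot(*point) >= lookahead_m:
            return point
    return path[-1]


def pure_pursuit_steering(target_xy, wheelbase_m=2.7):
    """Control (simplified): steering angle in radians toward a target point.

    A positive angle means steer left, a negative angle means steer right.
    """
    x, y = target_xy
    distance = math.hypot(x, y)
    if distance == 0.0:
        return 0.0  # already at the target, keep the wheel straight
    alpha = math.atan2(y, x)  # bearing of the target relative to the heading
    return math.atan2(2.0 * wheelbase_m * math.sin(alpha), distance)


if __name__ == "__main__":
    # Hypothetical lane boundaries curving gently to the left.
    left = [(2.0, 1.5), (6.0, 2.0), (10.0, 3.0)]
    right = [(2.0, -1.5), (6.0, -1.0), (10.0, 0.0)]

    path = lane_centerline(left, right)
    target = pick_target(path)
    angle = pure_pursuit_steering(target)
    print(f"target={target}, steering={math.degrees(angle):.2f} degrees")
```

A real car repeats this loop many times per second, re-planning from fresh camera and sensor data each time, so small errors in any single steering command get corrected on the next pass.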

So, that’s pretty much how self-driving cars work at a high level. Everything else that self-driving cars do is a more sophisticated implementation of these key components.

See you next time!

– Siddhartha Chowdhuri